Entropy? You said “entropy”? What is that?

I'll answer you. And since I once answered this very question badly, I'll try to do it properly this time. We'll see that entropy is essential for understanding why some things happen and others don't. For example, a whole egg can become a broken egg (far too easily), whereas no one has ever seen the reverse. And the concept goes a very long way.

To give away the ending: entropy measures disorder. Nature tends toward disorder, and therefore toward the greatest entropy. Entropy thus tells us in which direction a process will run. If you have understood that, you can skip the rest of this article. Otherwise, read on.

Entropy is a concept, a mathematical construction. It is often calculated as the ratio of two other quantities, or with the formula we will see shortly. (For example, when heat energy is transferred to an object, the entropy change is the quantity of heat divided by the absolute temperature, ΔS = Q/T, provided the transfer is reversible and the temperature stays constant.) You cannot hold a piece of entropy in your hand, or even feel it the way you feel hot or cold. There is no entropy-meter. But despite its abstract nature, we can gain a useful qualitative understanding of entropy.
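To make the ratio concrete, here is a minimal Python sketch with illustrative numbers of our own (they are not from the text). In it, 1000 J of heat passes from a hot body at 400 K to a cold body at 300 K. Both bodies are assumed large enough that their temperatures do not change. The cold body gains more entropy than the hot body loses, so the total goes up, and that is the direction in which the process runs. The helper name `entropy_change` is ours.

```python
def entropy_change(heat_joules: float, temperature_kelvin: float) -> float:
    """ΔS = Q / T, in J/K, for heat Q transferred reversibly at constant absolute temperature T."""
    if temperature_kelvin <= 0:
        raise ValueError("temperature must be given in kelvin and be above absolute zero")
    return heat_joules / temperature_kelvin

# Illustrative numbers only: 1000 J of heat flows from a body at 400 K to a body at 300 K.
q = 1000.0
delta_s_hot = -entropy_change(q, 400.0)   # the hot body gives up heat, so its entropy falls
delta_s_cold = entropy_change(q, 300.0)   # the cold body receives the same heat at a lower T
total = delta_s_hot + delta_s_cold        # net change for the pair of bodies

print(f"{delta_s_hot:.2f} {delta_s_cold:.2f} {total:.2f}")  # -2.50 3.33 0.83
```

Running the flow the other way, from cold to hot, would flip every sign and make the total negative. That is why it does not happen on its own.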

Entropy has been studied for different purposes, for example to build the best possible heat engine. These different purposes have sometimes brought out different aspects of entropy, but they have all been shown to be compatible. There is even an entropy that corresponds to information. I admit I don't really understand that one.

Entropy is a central notion in the branch of physics called thermodynamics. Thermodynamics is the science of energy in all its forms, so it underlies all the other sciences. As for energy, it is the capacity to do work, for example to move or lift a heavy object. Other kinds of “work” that require energy include moving electrons through a wire (this is called an electric current) and moving charged ions through the nervous system. There are many others.

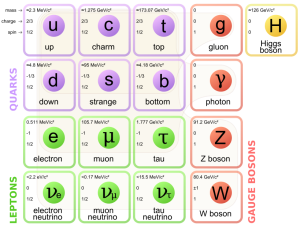

The biological, chemical and physical sciences show that everything is made of smaller things, with a few exceptions (the most elementary particles, such as quarks). Even an electromagnetic field, such as light, is made of photons. To grasp what “made of” means, imagine a collection of molecules or grains of something. Food lovers can think of a sauce: molecules of water and wine, grains of pepper, salt ions, aroma molecules from mushrooms, basil and other good things. Yum! (In French, that's « miam ».)

Now, back to entropy. There are several ways to explain it, but the most intuitive is certainly the following.

Entropy is a measure of how probable it is that something made of parts exists, or occurs, in a given state, that is, with a given arrangement or order of its parts.

Paul's socks

Examples, you want examples. All right, OK…

I have a friend whose mother was very strict with him when he was a child. Let me explain. She insisted that he keep his pale blue socks on the left side of a drawer in his chest of drawers and his pink socks on the right side of the same drawer. You can see at once how much this tormented poor Paul. So where does entropy come in? Simple, my dear.

To keep things simple, let's imagine that Paul has three pairs of socks: three pale blue socks and three pink ones. (He always wore socks of two different colours. (He didn't tell me whether he wore a specific colour on each foot, and I didn't dare ask.)) On laundry day, he can put his clean socks away as follows.

Let's start with the pale blue socks and suppose they are numbered so that we can talk about them individually. (This calculation is not rigorous, but it gives the idea.) For the first one to put away, Paul can choose number one, number two or number three: three possible choices. After this difficult decision, he has only two pale blue socks left. So there are only two choices: number two or number three, if he has already taken number one. For the third, he has only one choice, the one that remains. So there are three choices for the first sock and, for each of them, two choices for the second, which makes six possibilities in all (3 times 2 makes 6). The last sock adds nothing, since only one choice remains. It's the same for the pink socks: six ways. Each of the six ways of ordering the blue socks can be combined with each of the six ways of ordering the pink ones. That makes 6 times 6, or 36 ways of putting the socks away in order. Note that the socks aren't really numbered. So when Paul's mother checks her son's obedience, she cannot tell which of the 36 possibilities he chose; she only sees that the socks are neatly sorted the way she wants.

One day, in a fit of despair, Paul pulled out the drawer and shook it violently for a minute or two before calming down. What state were the socks in afterwards? Scattered everywhere, all mixed up, right? When Paul shook the drawer, entropy struck! Now the socks are in fairly complete disorder. Let's count the ways to put them away from scratch, on that same laundry day, when any sock can go anywhere. For the first one, Paul can take any of the six: six choices. For the second, five socks remain, so five choices for each of the six choices of the first sock. That already makes 6 times 5, or 30 choices just for the first two socks. For the third, four socks remain, so four choices. We're already at 6 times 5 times 4 = 120 choices, and there are 3 socks left. We already know that three socks can be arranged in six ways, so the total is 120 times 6, or 720 possibilities!

So of the 720 possible ways of putting the socks away, only 36 sort them by colour, pale blue on one side and pink on the other. The other 684 leave them mixed. Paul's mother doesn't distinguish between the different sorted arrangements, or between the different mixed ones, but she sees the difference between the two states of the socks all too well. Which is more probable? Disorder, obviously, by 684 to 36, or 19 to 1. And entropy is a measure of this disorder.
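The counting can be checked with a short Python sketch. It counts once with the multiplication principle and once by listing every arrangement. The labels B1…B3 and P1…P3 are a hypothetical numbering, like the one imagined in the text. The function `looks_sorted` is our own stand-in for Paul's mother's inspection. The sorted count combines every ordering of the blue socks with every ordering of the pink ones, 3! × 3! = 36.

```python
from itertools import permutations
from math import factorial

# Hypothetical labels: B1..B3 for the pale blue socks, P1..P3 for the pink ones.
SOCKS = ["B1", "B2", "B3", "P1", "P2", "P3"]

def looks_sorted(drawer) -> bool:
    """The macrostate Paul's mother checks: blue in the 3 left slots, pink in the 3 right slots."""
    return all(s.startswith("B") for s in drawer[:3]) and all(s.startswith("P") for s in drawer[3:])

# Multiplication principle: (3 * 2 * 1) orderings of the blue socks
# times (3 * 2 * 1) orderings of the pink ones; 6 * 5 * 4 * 3 * 2 * 1 in all.
sorted_by_formula = factorial(3) * factorial(3)
total_by_formula = factorial(6)

# Brute force: list every arrangement of the labelled socks (each is a microstate) and test it.
microstates = list(permutations(SOCKS))
sorted_count = sum(looks_sorted(d) for d in microstates)
mixed_count = len(microstates) - sorted_count

print(sorted_by_formula, total_by_formula)          # 36 720
print(len(microstates), sorted_count, mixed_count)  # 720 36 684
print(sorted_count / len(microstates))              # 0.05
```

Both methods agree: a randomly filled drawer looks sorted only 1 time in 20.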

Of course, physicists are serious people and don't talk (much) about the entropy of a sock drawer. But they do talk about the entropy of molecules, for example those of a gas. There, a sample may contain something like 10²³ molecules, and disorder wins over order by an enormous margin. Once again, what we are counting is the number of different microstates that correspond to the same macrostate.
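To see how quickly order becomes improbable as the number of parts grows, the sock count can be generalised to n blue and n pink socks. The generalisation and the helper name `sorted_fraction` are ours; this is a minimal sketch, not a model of a real gas. There are n! × n! sorted arrangements and (2n)! arrangements in all, so the sorted fraction simplifies to 1 / C(2n, n).

```python
from math import comb

def sorted_fraction(n: int) -> float:
    """Fraction of all arrangements of n blue and n pink socks (2n slots) that are sorted by colour.

    Sorted arrangements: n! * n!; all arrangements: (2n)!.
    Their ratio simplifies to 1 / C(2n, n).
    """
    return 1 / comb(2 * n, n)

for n in (3, 10, 50):
    print(n, sorted_fraction(n))
```

The results show how fast order becomes rare:

- With 3 socks of each colour, 1 arrangement in 20 is sorted.
- With 10 of each, it is 1 in 184,756.
- With 50 of each, it is roughly 1 in 10²⁹.

With something like 10²³ molecules, the ordered states are, for all practical purposes, never seen.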

Raoul's marbles

Raoul has a box full of identical marbles. Suppose he swaps two of them. We can specify the position of each individual marble. So we can speak of the original detailed state (the microstate) and of the detailed state after the two marbles are swapped. The microstates are different. But as seen by the proud owner of the box (the macrostate), nothing has changed: he cannot tell the two microstates apart. In this way, a large number of different microstates can look, from the outside, like the same macrostate. The more marbles there are, the greater the number of arrangements that look identical to Raoul, and the greater the entropy of the box of marbles.
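Here is a small Python sketch of this picture. Following the text, each marble is imagined to carry an invisible label that only we can see; the labels and the helper names are hypothetical. A microstate is the sequence of labels in the box. The macrostate is what Raoul sees, where every marble looks alike. In this simplified picture, every rearrangement of n marbles gives the same macrostate, so the count of microstates is n! and grows very quickly with n.

```python
from itertools import permutations

def macrostate(microstate: tuple) -> tuple:
    """What Raoul sees: all marbles look alike, so the labels are erased."""
    return tuple("marble" for _ in microstate)

def count_microstates(n_marbles: int) -> int:
    """Number of labelled arrangements (microstates) that Raoul cannot tell apart."""
    labels = tuple(range(n_marbles))  # hypothetical labels that only we can see
    reference = macrostate(labels)
    return sum(1 for m in permutations(labels) if macrostate(m) == reference)

original = (0, 1, 2, 3)
swapped = (1, 0, 2, 3)  # Raoul swaps marbles 0 and 1

print(original == swapped)                          # False: different microstates
print(macrostate(original) == macrostate(swapped))  # True: same macrostate
print([count_microstates(n) for n in range(1, 6)])  # [1, 2, 6, 24, 120]
```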

How simple!

The laws of thermodynamics

The physicist who came up with this way of defining entropy was Ludwig Boltzmann. His tombstone bears the formula he gave the world:

S = k log W

where:

- S is the entropy;
- k is a constant named after Boltzmann (the Boltzmann constant, about 1.38 × 10⁻²³ joules per kelvin);
- log is the natural logarithm;
- W is the number of possible microstates (the different arrangements of Paul's socks, for example) that correspond to the same macrostate (the drawer as his mother sees it).

In other words, the larger the number of microstates, the greater the entropy, since entropy is proportional to the logarithm of that number. Greater entropy corresponds to a greater degree of disorder.
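Boltzmann's formula can be applied directly to the sock drawer in a few lines of Python. The microstate counts come from the sock example: 3! × 3! = 36 sorted arrangements, and 6! − 36 = 684 mixed ones. The helper name `boltzmann_entropy` is ours, and `math.log` is the natural logarithm. The resulting entropies are minuscule, around 10⁻²³ J/K, because k is tiny. Entropy only reaches everyday sizes with something like 10²³ particles.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the SI since 2019)

def boltzmann_entropy(w: int) -> float:
    """S = k ln W, where W is the number of microstates behind one macrostate."""
    if w < 1:
        raise ValueError("a macrostate needs at least one microstate")
    return K_B * math.log(w)

s_sorted = boltzmann_entropy(36)   # socks sorted by colour
s_mixed = boltzmann_entropy(684)   # socks mixed up
print(s_sorted, s_mixed)
print(s_mixed - s_sorted)          # equals k ln(684 / 36) = k ln 19
```

Only the ratio of the counts matters for the difference, which is why shaking the drawer raises the entropy by k ln 19.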

But why talk about entropy at all? What is it for? Well, because the second law of thermodynamics says:

In any physical process, the entropy of the universe never decreases; in any real (irreversible) process, it increases.
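Paul's drawer gives a toy version of this law. The sketch below rests on assumptions of our own: six socks, with each "jolt" swapping two randomly chosen slots, which may be the same slot. It starts from a sorted drawer and records how often the drawer looks sorted after each jolt. The drawer quickly loses its order. Afterwards it looks sorted only about 1 time in 20, since exactly one colour pattern out of the C(6, 3) = 20 possible ones is the sorted one. With only six socks it does occasionally fall back into order. The law is about systems with enormous numbers of particles, where that chance becomes negligible.

```python
import random

def looks_sorted(drawer: list) -> bool:
    """True when the three left slots hold the blue socks (so the right ones hold the pink)."""
    return drawer[:3] == ["B", "B", "B"]

def shake(n_jolts: int, seed: int = 0) -> float:
    """Start from a sorted drawer, apply n_jolts random swaps and return the
    fraction of jolts after which the drawer happens to look sorted."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    drawer = ["B", "B", "B", "P", "P", "P"]
    times_sorted = 0
    for _ in range(n_jolts):
        i, j = rng.randrange(6), rng.randrange(6)  # i == j: this jolt moved nothing
        drawer[i], drawer[j] = drawer[j], drawer[i]
        times_sorted += looks_sorted(drawer)
    return times_sorted / n_jolts

print(shake(200_000))  # should come out close to 1/20 = 0.05
```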

This is fairly obvious when you think of Paul's adventure shaking his sock drawer. For the record, the first law says:

The energy of the universe does not change; it is always conserved.

This is the famous law of conservation of energy. (In English these are usually called “laws”; in French they are called « principes », principles, as a reminder that they cannot be proven.) There is also a third law, which would take longer to explain, but let's quote it:

The entropy of a perfect crystal at absolute zero is zero.

(Just in case: absolute zero is 0 kelvin, or −273.15 °C.) But that's not all. After all this, someone noticed that there ought to be another law, even more fundamental than the other three. That turns the laws of thermodynamics into a trinity of four. So it was christened the zeroth law:

If system A is in thermal equilibrium with system B, and system B is in thermal equilibrium with system C, then systems A and C are also in thermal equilibrium.

So what, you say? It means there is a way to know, to measure, the thermal state of a system. That could be system B, where B is simply a thermometer in thermal equilibrium with A and C. Here, “being in thermal equilibrium” means that the two have the same temperature. This is very important. What would we talk about without the zeroth law? “I think the logarithm of entropy is going to rise tomorrow.” Oh no!

Thermodynamics is assumed to hold everywhere in the universe, except for very small systems such as individual particles (protons, electrons, quarks, etc.). Physicists know perfectly well that today's theories will be modified and extended by tomorrow's… except for the second law of thermodynamics. That one, they believe in firmly!

More on disorder

Paul's wife, Drusilla, often remembers her childhood holidays at the seaside and the sandcastles she loved to build there. Beautiful castles, with moats, drawbridges, machicolations and an enormous keep in the middle. Let's consider that.

When Drusilla built a sandcastle, she placed small amounts of sand exactly where she wanted them. Since she is very meticulous, it was as if she placed each grain at a specific spot in the structure and not just anywhere. Nothing was left to chance. But when Drusilla's mother called her in to eat and go to bed, she went to bed sadly, because she knew what awaited her the next morning. She would get up early and run to the beach in her pyjamas, the morning sun in her eyes and the shrill cries of the gulls in her ears. There she would reach her castle… or what was left of it… and find that the tide (entropy's assistant) had done its job. The beautiful, orderly castle had been reduced to a shapeless heap of wet sand. Mud. But Drusilla was brave (or stubborn, if you prefer): she ate her breakfast and started again.

Having seen the case of Paul's socks, we can see that choosing where to put Drusilla's grains of sand was a similar problem. The grains were far more numerous than the socks, though, and the destinations (wall, keep, etc., but not the moats) more numerous than the two sides of the drawer. As already said, she placed the grains so as to create order. Suppose instead that she had simply thrown the grains anywhere on the site of the future non-castle. She would have made a heap much like the one the tide left behind: disordered, and therefore of higher entropy.

So let's note:

- Nature chose the state of greatest disorder; in doing so, it followed the second law and increased the entropy of the universe.

- Drusilla had to work, that is, spend energy, to build the orderly version of the castle. She did slightly reduce the entropy of the sand by arranging it into a castle. But the work she did increased the entropy of the universe as a whole.

That is why the second law and entropy matter to us. You have to work, that is, spend energy (the capacity to do work), to reduce the entropy of one corner of the universe, while increasing the entropy of the universe as a whole. But in the long run, nature will always find a way to undo our projects. One may find that a little sad.

And the egg

We can now see the role entropy plays in everyday events, such as an egg breaking. Or rather, an egg that I break through clumsiness. The whole egg is a well-ordered structure; the broken egg is in a much less organised state, and therefore of higher entropy.

But if I break the egg on purpose to make an omelette, the well-beaten egg is even more disorganised, and so in a state of even higher entropy.

One could say that time doesn't go backwards but forwards because that is the direction in which entropy can keep increasing, as in the following example…

The human body

Entropy = death! Yes indeed. Our bodies, with their cells, tissues and organs, are highly ordered biological objects. We maintain this order only by spending energy. That energy comes from the food we eat, which our organs and cells digest and break down into energy-carrying molecules. The most important of these is ATP (adenosine triphosphate), which delivers its energy to the cells. That energy runs the metabolism of the whole body, the electrical signalling of the neurons and the contraction of the muscles. But with age, disorder arrives in the form of little aches, osteoarthritis and more serious things. And death is followed by total disintegration (disorder).

More abstract: information

We have seen that entropy is a measure of the number of indistinguishable microstates that correspond to the same macrostate of a system. Swap two pink socks or two grains of sand and no one notices the difference. Entropy therefore corresponds to a lack of information: data we could know but don't. For example, we don't know whether grain of sand number 2,143,768 lies above number 3,472,540 or the other way round. So the higher the entropy, the less information we have about the microstate underlying the macrostate.

Entropy is therefore a measure of the information hidden in a system.
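The hidden information can be counted in bits. This sketch uses a standard result of information theory that the article itself does not develop. Singling out one microstate among W equally likely ones takes log₂ W bits. The helper name `missing_bits` is ours. The counts are those of the sock drawer: 3! × 3! = 36 sorted arrangements and 6! − 36 = 684 mixed ones. The last line shows the link with Boltzmann's formula, since k ln W = (k ln 2) × log₂ W.

```python
import math

def missing_bits(w: int) -> float:
    """Bits of information needed to single out one microstate among w equally likely ones."""
    return math.log2(w)

print(round(missing_bits(36), 2))   # 5.17 bits still unknown when the drawer looks sorted
print(round(missing_bits(684), 2))  # 9.42 bits still unknown when it looks mixed

# The same quantity in Boltzmann's units: S = k ln W = (k ln 2) * log2(W).
K_B = 1.380649e-23  # Boltzmann constant in J/K
print(K_B * math.log(2) * missing_bits(36))
```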

But we won't go into information theory here.

And finally, the universe

Galaxies, stars, our solar system, our good old Earth: all are systems ordered by the expenditure of energy. That energy is of nuclear origin in the stars, or comes from space itself (gravitational or electromagnetic fields, fields of quantum origin). One day, entropy will win there too. The human species may find refuge elsewhere before our Sun swells into a red giant in about five billion years. Even so, the whole universe will eventually become diffuse, cold and dark. Carpe diem!

That still leaves us time to achieve (or not) our dreams of glory or wealth, of art or sexual prowess, of knowledge or humanity. Through them we can prove to ourselves that we are able to rise above our evolutionary heritage. And through our own energy, we can push back, for a while, the entropy of our own little corner of the universe.