Let us look at examples of brain circuits by considering the different sense organs. But first, a word about maps.

Cortical maps

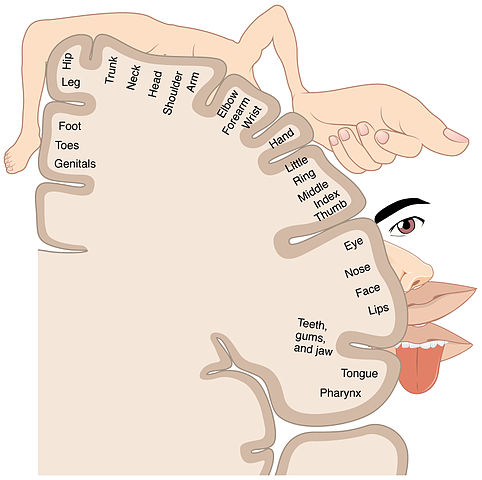

In many cases, sensory data arriving in the cortex do so in such a way as to define a cortical map. In general, adjacent sensory areas are also adjacent to each other on the map, although the map may be so grossly distorted that this is not always the case. In the process of somatotopy, points on the surface of the body are mapped onto a brain structure. It is like a map of the Earth projected onto a flat surface, but even more distorted because the size of the cortical area attributed to a given area of skin surface is proportional to its importance rather than to its original size. In a somatotopic map, the area of cortex corresponding to the lips is huge in comparison to that of the trunk or hip, as is obvious in the following figure.

Somatotopic map, from Openstax College via Wikimedia Commons

Since adjacent areas of the map do not always correspond to adjacent organs, leakage or “cross-talk” between the areas may lead to confusion of senses, as in the case of synesthesia, in which a person may see numbers or hear musical tones as possessing colors.[1]

Similarly, the retina of the eye and the cochlea of the ear map onto the brain via retinotopy (by position on the retina) and tonotopy (by sound frequency). There are multiple somatotopic and retinotopic maps in the brain.

There are differences between species. The vibrissae of cats and rodents receive a much larger share of the map than do our upper-lip whiskers.

Vision

Vision is by far our most important sense, as is made clear by the huge part of the brain which processes visual data: Almost half of the neocortex is taken up with visual processing.

Vision in the eye

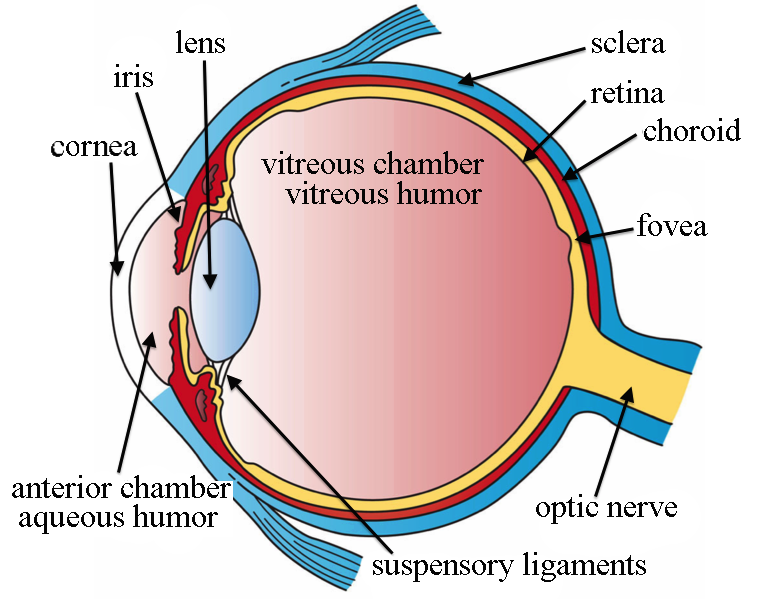

It starts when light of wavelengths between about 390 nm and 700 nm (violet to red, the range lying between ultraviolet and infrared) passes through the cornea, which does most of the focusing of the light; then through the pupil (the opening in the iris) and the lens, which does some more focusing; through the vitreous humor and onto the photoreceptors of the retina, at the back of the eye.

The retinas are actually parts of the brain, growing out of the diencephalon during embryonic development. The visual receptors in retinas are photoreceptors, which work like metabotropic receptors but are sensitive to light. Light energy causes a change in a molecule in the receptors and this brings about a reduction in the number of open depolarizing ion channels. There are two types of photoreceptors:

- Cones are receptive to colors, some to red, some to blue and some to green (approximately). But they are much less sensitive to light than are rods. They are more populous in the center of the retina.

- Rods are more sensitive to light (1000 times the cones), but do not distinguish colors. They are more populous around the periphery of the retina.

Eye structure, by Holly Fischer via Wikimedia Commons

In more detail, a photoreceptor cell in the dark is at a potential of around -30 mV, due to the existence in the cell membrane of open Na+ channels. These channels in effect allow a current, called the dark current, which works against the Na-K pump and partially depolarizes the cell. When light impinges on the photoreceptors, a pigment (rhodopsin in rods; three similar but different types of opsins in cones) momentarily changes its form (and color). This stimulates a G protein to activate an enzyme which in turn reduces the concentration of the second messenger cGMP (cyclic guanosine monophosphate) and this causes Na+ channels to close. Less input of Na+ means that the cell’s membrane potential becomes more negative: The cell hyperpolarizes and less glutamate is released.

So photoreceptors work backwards to what one might suppose. It is darkness, not light, which causes the receptor to depolarize and release more of the photoreceptor’s neurotransmitter, glutamate. In the human eye, only the ganglion cells (and a few amacrine cells) form action potentials. The others simply change their membrane potentials and release more or less glutamate, which is therefore the signalling agent.
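The backwards sign of the photoreceptor response can be traced step by step in a toy model. This is only a sketch: the linear relations, the 0-to-1 light scale, the function name `photoreceptor_response` and the -60 mV value for bright light are assumptions made for illustration; only the -30 mV dark potential comes from the text.

```python
def photoreceptor_response(light: float) -> dict:
    """Toy model of one photoreceptor; light runs from 0.0 (dark) to 1.0 (bright)."""
    if not 0.0 <= light <= 1.0:
        raise ValueError("light must be between 0 and 1")

    # Light activates the pigment, which (via a G protein and an enzyme)
    # lowers the cGMP concentration. Linear relation is an assumption.
    cgmp = 1.0 - light

    # cGMP holds the Na+ channels open, so fewer channels are open in light.
    open_na_fraction = cgmp

    # -30 mV in the dark is from the text; -60 mV in bright light is assumed.
    dark_mv, bright_mv = -30.0, -60.0
    potential_mv = bright_mv + (dark_mv - bright_mv) * open_na_fraction

    # Relative glutamate release: highest in the dark, lowest in bright light.
    glutamate = open_na_fraction

    return {"potential_mv": potential_mv, "glutamate": glutamate}


for level in (0.0, 0.5, 1.0):
    print(level, photoreceptor_response(level))
```

More light gives a more negative potential and less glutamate, which is the reversal described above.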

The number of receptors on the retina, on the order of 100 million, is far too great, and the information they produce arrives too fast, for it all to be sent to the brain, especially as there are only around a million axons leading to the brain. So, in between light reception by the photoreceptors and output from ganglion cells to the brain, some information processing takes place within the retina.[2]

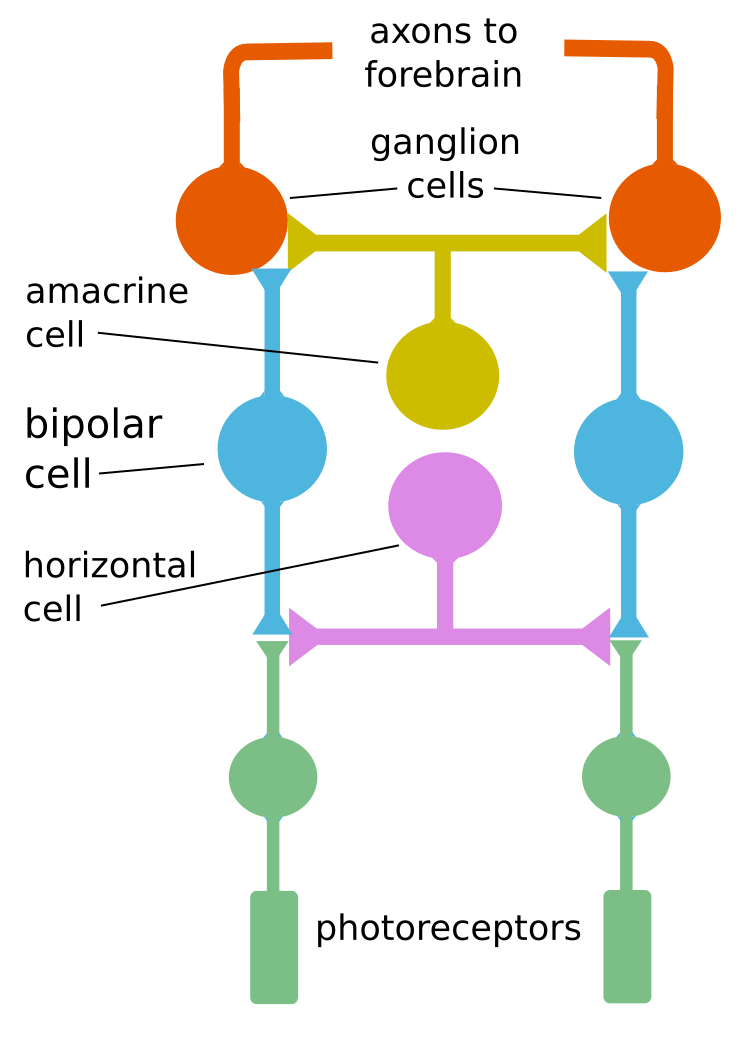

As a result, each retinal ganglion cell reports an average of the signals from the receptors which feed it, which together make up its receptive field. This processing occurs as the output from the photoreceptors passes through two other types of cells (horizontal and bipolar) before it reaches the output cells, the ganglion cells. Quite ingeniously, these cells enable receptors in the center of the ganglion cell’s receptive field to signal light reception with the opposite sign of receptors around the center. So the net result to the ganglion cell is the difference in the overall signals from the center and the surrounding area. This may be a difference in intensity or in color. No difference between the center and surrounding areas means no action potential generated by the ganglion cell and a consequent reduction in neural traffic. Such a receptive field is called a center-surround receptive field. Such lateral inhibition, reduction of a central signal by peripheral ones, is also characteristic of information processing in other sensory pathways.

In yet more detail, cells in the retina are arranged in layers. Output from the photoreceptors passes, directly and by way of horizontal cells, to bipolar cells, which transmit it to the next layer of processing. There are two types of bipolar cells:

- OFF bipolar cells have glutamate-gated ion channels and depolarize on stimulation by glutamate.

- ON bipolar cells have G protein-coupled receptors and are hyperpolarized by glutamate.

The logic behind these names is that the OFF cells depolarize when light is off (more glutamate); the ON cells, when light is on (less glutamate).[3] The important point is that there are two types of bipolar cells with opposite reactions to a given input.
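For readers who do insist, the naming logic can be written out as a small lookup. The function name `bipolar_responses` and the string labels are hypothetical, chosen only for this illustration.

```python
def bipolar_responses(light_on: bool) -> dict:
    """Return the glutamate level and the state of each bipolar cell type."""
    # Photoreceptors release less glutamate in light, more in darkness.
    glutamate = "less" if light_on else "more"

    # OFF cells are depolarized by glutamate; ON cells are hyperpolarized by it.
    if glutamate == "more":
        off_cell, on_cell = "depolarized", "hyperpolarized"
    else:
        off_cell, on_cell = "hyperpolarized", "depolarized"

    return {"glutamate": glutamate, "OFF": off_cell, "ON": on_cell}


for light_on in (True, False):
    print("light on" if light_on else "light off", bipolar_responses(light_on))
```

In both cases exactly one of the two cell types is depolarized, so the pair always carries a signal, whichever way the light changes.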

Remember: Only photoreceptor cells are light-sensitive and only retinal ganglion cells (RGCs) send signals to the brain. The bipolar, horizontal and amacrine cells are components of the “hardware” which “computes” what gets sent from photoreceptors to RGCs.

Each bipolar cell is connected in two ways:

- The center of the cell’s receptive field is connected directly to a group of receptors, the number of receptors per receptive field varying from one, in the middle of the retina, to thousands on the periphery, with inverse variations in resolution;

- The surrounding area of the cell’s receptive field is connected indirectly through the intermediary of horizontal cells which invert the effect of light from the surrounding area, giving it opposite polarity to that of the center.

So the bipolar cell, and through it the RGC, receives a total signal which is the amount of light in the middle of its receptive field minus an adjusted amount of light in the surrounding area.
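The "center minus adjusted surround" arithmetic can be sketched on a tiny patch of receptors. This is an illustration under simplifying assumptions, not a model of real retinal wiring: the 3x3 patch, the single-receptor center, the plain average over the surround and the `surround_weight` parameter are all made up for the example.

```python
def center_surround(patch, surround_weight=1.0):
    """Center light minus the weighted mean light of the eight surrounding receptors.

    patch is a 3x3 list of light intensities; patch[1][1] is the center.
    """
    center = patch[1][1]
    surround = [
        patch[row][col]
        for row in range(3)
        for col in range(3)
        if (row, col) != (1, 1)
    ]
    return center - surround_weight * sum(surround) / len(surround)


uniform = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]
bright_spot = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
dark_spot = [[1.0, 1.0, 1.0], [1.0, 0.0, 1.0], [1.0, 1.0, 1.0]]

for name, patch in (("uniform", uniform), ("bright spot", bright_spot), ("dark spot", dark_spot)):
    print(name, center_surround(patch))
```

Uniform light gives zero, a bright spot on a dark surround gives a positive signal, and a dark spot on a bright surround gives a negative one.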

The above figure shows how one horizontal cell can link two receptors. The resulting algebraic sum of the two fields gives the difference of the polarizations and may be positive (depolarized) or negative (hyperpolarized).

But there is more. At the next level, the inner plexiform layer, there are amacrine cells which have multiple functions. Since they do not seem to participate in the center-surround process, we will not consider them further.

The result of retinal information processing is that ganglion cells have center-surround receptive fields, functioning as follows:

- An ON-center ganglion cell emits an action potential when light strikes only the middle of its receptive field.

- An OFF-center ganglion cell emits an action potential only when light is off in the center of its receptive field.

Only a difference in illumination over the center-surround receptive field will generate an action potential in the ganglion cell. This is an effective way of limiting the traffic over the optic nerves.

It is also a way of detecting edges, as the center and surrounding areas do not quite cancel out in the case of all light or all dark, but have different results in these two cases. So movement of an edge across this retinal receptive field gives rise to a characteristic sequence of action potentials. It also gives rise to the illusion of a gray square’s appearing lighter against a dark background, but darker against a light one.
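A one-dimensional sketch shows what a single cell reports as an edge sweeps past it. It assumes a row of seven receptors, an ON-center cell whose center is one receptor and whose surround is its two neighbors, and exact cancellation under uniform light (a simplification, since the text notes that real cells do not cancel completely). The function names are hypothetical.

```python
def on_center_response(signal, index):
    """Center receptor minus the mean of its two neighbors."""
    center = signal[index]
    surround = (signal[index - 1] + signal[index + 1]) / 2
    return center - surround


def sweep_edge(n_receptors=7, cell_index=3):
    """Move a light/dark edge from left to right and record the cell's response."""
    responses = []
    for edge in range(n_receptors + 1):
        # Receptors to the left of the edge are lit, the rest are dark.
        signal = [1.0 if i < edge else 0.0 for i in range(n_receptors)]
        responses.append(on_center_response(signal, cell_index))
    return responses


print(sweep_edge())
```

The response is zero while the field is entirely dark or entirely lit, dips negative when the light first reaches the surround, and swings positive when it reaches the center: a characteristic sequence tied to the passing edge.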

A similar mechanism is used in some color-detecting ganglion cells. For instance, an R+G– type ganglion cell has a red-sensitive center field and a green-sensitive surround field, so that green around red inhibits signaling of the red. So white light, which contains all wavelengths, would cause the two fields to cancel out.[4]

Adaptation to sudden light or dark has several components. One minor one is the opening and closing of the pupil to allow more or less light to come in. More important, and more amazing, is the fact that the circuits of bipolar and horizontal cells are re-arranged to pass information from more rods to the ganglion cells, in the case of dark adaptation. Also, more fresh, unused rhodopsin must be generated. The opposite processes take place in the case of light adaptation.

So one ganglion cell receives input from many photoreceptors, fewer at the center of the retina, the fovea, and more on the periphery. As a result, resolution is better in the center and light sensitivity is better on the periphery.

Vision in the brain

Each ganglion cell reports an average of the signals from the receptors which feed it. More intra-retinal processing takes place so that specific features, such as color or motion, are sent on to the brain: to the thalamus, of course, but also to over a dozen other areas. Among these are zones like the superior colliculus for controlling eye movements, or saccades, retinal slip and visual tracking (keeping your eyes on a moving target); the hypothalamus for control of circadian (night and day) rhythms; and the midbrain for opening of the pupil.

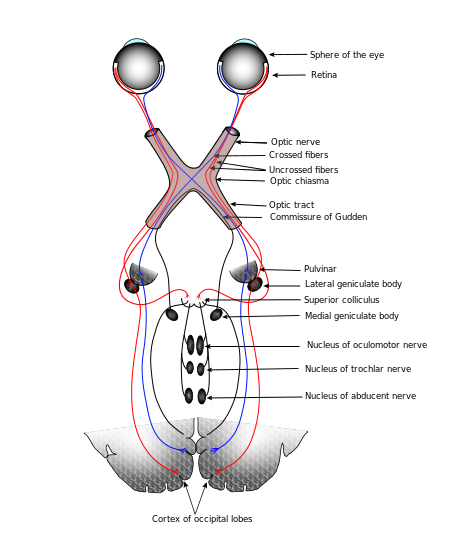

The part of the thalamus receiving visual information is the dorsal lateral geniculate nucleus, or dLGN or, more simply, the LGN, of which there is one on each side of the brain. The optic nerves (bundles of axons) carry information from the left half of each eye's visual field to the right LGN, and vice versa, with the axons from the nasal (inner) half of each retina crossing over at a point called the optic chiasma. So one entire side of the visual field, rather than one entire eye, is processed by the opposite side of the brain. The nerves carrying visual information from the chiasma to the LGN are called the optic tracts, to distinguish them from the optic nerves between the eye and the chiasma. Amazingly, after all this mixing of signals in the same optic tract, all gets sorted out again in the LGN. From there, the information arrives in Visual Area 1 (V1) in the occipital lobe and travels through V2 and V3 and others, where different features are analyzed: orientation, movement direction, rotation, translation, parallax, colors, faces and more.

Connections of the human visual system, after Gray’s Anatomy via Wikimedia Commons

Each retinal photoreceptor, rod or cone, is composed of an outer segment (farthest from the lens, in other words, at the back), an inner segment, a cell body and a synaptic terminal, which connects to bipolar and horizontal cells. In rods, light reception takes place in a column of disks which are stacked parallel to each other. Light is detected in the disks by receptor proteins called opsins (such as rhodopsin in the rods). An opsin is like a metabotropic receptor in the disk membrane, but its binding site is occupied by a ligand[5] called retinal, which is a derivative of vitamin A.[6] When EM radiation (light) is absorbed by the retinal, it changes the configuration of one of the molecule's double bonds from cis to trans, and this changes the molecule's shape and color (so the process is called bleaching). This triggers the series of processes leading to closing of Na+ channels and hyperpolarization of the cell.

Considering the existence of the light-sensitive pigment rhodopsin across space and time reveals aspects of the evolution of vision.

As we have seen in the chapter on biochemistry, formation of an eye at a particular place in animals depends on the homeobox gene Pax6. Remember that Pax6 codes for a transcription factor, which switches other genes on, not for a structural part of the eye itself. Pax6 also influences brain formation. Not only does Pax6 occur in almost all vertebrates and invertebrates, but so do opsins. What is more, some human retinal ganglion cells contain a kind of opsin called melanopsin, which has a role in circadian rhythms. But melanopsin is characteristic of invertebrate photocells. All these observations suggest a common source for photocells across the vertebrate-invertebrate world.

So it is generally accepted that photocells in vertebrates and invertebrates evolved from a common ancestor.[7] Somewhere along the line, this cell was duplicated, one type to become eyes, the other, circadian detectors. The eye was originally an area of light-sensitive cells on the surface of the skin. Then epithelial folding moved the cells into a cavity, lenses formed, and so forth, on up to animal eyes today.

Here’s the punch line: Rhodopsin is also found in the chloroplasts of some algae, chloroplasts being the organelles in plant cells which convert energy from sunlight into ATP. So the original photosensitive organism may also have been the ancestor of chloroplasts and was very likely our old pal, a cyanobacterium!

Erbernochile erbeni (a trilobite) eye detail, Large compound eye with “eye-shade”. By Moussa Direct Ltd, via Wikimedia Commons.

The first animals to have image-forming lenses (and there were a lot of them, lenses and animals) were probably trilobites, whose eyes came to be composed of multiple oriented calcite lenses. These eyes date from 540 Mya, just after the start of the “Cambrian explosion”. With certain assumptions, it is possible to show that eyes could develop quickly enough that, in tandem with size increase due to enhanced oxygen content in the air, predators developed. So the eye may have been the match which lighted the Cambrian explosion.[8]

Now continue with the senses — hearing, touch and taste.

Notes

| ↑1 | Could they also be the basis of metaphor? |
|---|---|
| ↑2 | This can be likened to the Control Data Corporation supercomputers of the 70s (which I knew well), which had one central processor for mathematical and logical calculation, and a number (on the order of 10) of smaller, independent so-called peripheral processor units which handled input-output and storage devices. |
| ↑3 | Such logic can only be described by the French word tordu, twisted. Anyway, you don’t have to remember this. If you insist, though, it goes like this: Light on means the receptor emits less glutamate, which hyperpolarizes (turns off) the OFF cells and depolarizes (turns on) the ON cells. Light off means the receptor emits more glutamate, which depolarizes (turns on) OFF cells but hyperpolarizes (turns off) ON cells. Sheesh. |
| ↑4 | I find the functioning of retinal cells as ingenious as some of the other marvels of the scientific world, like the electron transport chain. |
| ↑5 | A substance which binds to a receptor. |
| ↑6 | Hence, the importance of vitamin A for vision. |
| ↑7 | Lane (2010), 199. |
| ↑8 | Lane (2010), 185. |